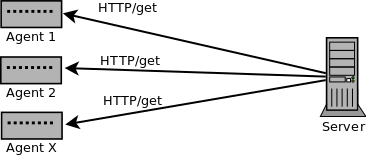

REST interface polling recorder

Lately I was working on a project where a Server periodically polled the HTTP REST interfaces of Agents and processed the data it obtained. Data flowed only from Agent to Server, so the Server could only react to Agent events and had no way to influence Agent behavior.

Because the Agent was installed on special industrial hardware, running one was pretty expensive. Besides that, laboratory tests did not closely resemble production usage. Writing an Agent emulator from scratch would be hard for two reasons:

- The data is complex

- We were not sure how the Agent behaves (there was no specification)

So we concluded that we needed a tool that would record the state of the REST interfaces (a JSON document in our case) over time and then replay it in the form of an Agent emulator. Then we could record data in production and use it for development and testing. But how should such documents be stored in a dump file? Simply saving every polled document would take too much disk space. Fortunately, there was room for optimization:

- If the document has not changed since the last poll, do not save it again. During emulation, the last document is used until a newer one is found in the dump.

- If the document has changed only slightly (just a few fields), it may be stored as a diff against the previous state. During emulation, the diff is applied to the previous state to calculate the new one.

- If it pays off, the diff or the full document may be compressed; in our case, using GZIP.

- Store a complete document every N minutes (the same idea as keyframes in video compression), so that if the file is broken or truncated, emulation can recover.
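The recording rules above could be sketched in Java roughly as follows. This is a minimal sketch under stated assumptions, not the project's implementation: the class `DumpEncoder`, its `DumpRecord` type and the `keyframeIntervalMillis` parameter are hypothetical, and because the post does not describe the real diff format, a simple stand-in diff is used (keep the common prefix and suffix, replace the middle). The full/diff and GZIP choices simply keep whichever encoding is smaller.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

/** Hypothetical recorder for one Agent interface: decides how each polled document is stored. */
class DumpEncoder {

    /** One dump record (hypothetical type). */
    static final class DumpRecord {
        final long timestamp;
        final boolean full;       // true = complete document, false = diff against previous state
        final boolean compressed; // true = payload is GZIP-compressed
        final byte[] payload;

        DumpRecord(long timestamp, boolean full, boolean compressed, byte[] payload) {
            this.timestamp = timestamp;
            this.full = full;
            this.compressed = compressed;
            this.payload = payload;
        }
    }

    private final long keyframeIntervalMillis; // "every N minutes", in milliseconds
    private String previous;                   // last recorded state, null before the first record
    private long lastFullTimestamp;            // time of the last complete document

    DumpEncoder(long keyframeIntervalMillis) {
        this.keyframeIntervalMillis = keyframeIntervalMillis;
    }

    /** Returns the record to append to the dump, or null when the document is unchanged. */
    DumpRecord encode(long timestamp, String document) {
        if (document.equals(previous)) {
            return null; // unchanged: the emulator keeps serving the last document
        }
        byte[] payload = document.getBytes(StandardCharsets.UTF_8);
        boolean full = true;
        boolean keyframeDue = previous == null
                || timestamp - lastFullTimestamp >= keyframeIntervalMillis;
        if (!keyframeDue) {
            byte[] diffBytes = diff(previous, document).getBytes(StandardCharsets.UTF_8);
            if (diffBytes.length < payload.length) { // store a diff only if it is actually smaller
                payload = diffBytes;
                full = false;
            }
        }
        byte[] gzipped = gzip(payload);
        boolean compressed = gzipped.length < payload.length; // GZIP only when it pays off
        previous = document;
        if (full) {
            lastFullTimestamp = timestamp; // every complete document also acts as a keyframe
        }
        return new DumpRecord(timestamp, full, compressed, compressed ? gzipped : payload);
    }

    /**
     * Stand-in diff format "prefixLen:suffixLen:replacement": keep the first prefixLen and
     * last suffixLen chars of the previous document and insert replacement in between.
     */
    static String diff(String oldDoc, String newDoc) {
        int max = Math.min(oldDoc.length(), newDoc.length());
        int prefix = 0;
        while (prefix < max && oldDoc.charAt(prefix) == newDoc.charAt(prefix)) {
            prefix++;
        }
        // Do not cut a surrogate pair (e.g. an emoji) in half.
        if (prefix > 0 && Character.isHighSurrogate(newDoc.charAt(prefix - 1))) {
            prefix--;
        }
        int suffix = 0;
        while (suffix < max - prefix && oldDoc.charAt(oldDoc.length() - 1 - suffix)
                == newDoc.charAt(newDoc.length() - 1 - suffix)) {
            suffix++;
        }
        if (suffix > 0 && Character.isLowSurrogate(newDoc.charAt(newDoc.length() - suffix))) {
            suffix--;
        }
        return prefix + ":" + suffix + ":" + newDoc.substring(prefix, newDoc.length() - suffix);
    }

    private static byte[] gzip(byte[] data) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory stream
        }
        return out.toByteArray();
    }
}
```

For a tiny change such as `{"a":1}` to `{"a":2}`, the stand-in diff is `5:1:2`, which is shorter than the full document; for very small payloads GZIP's own header outweighs any savings, so they stay uncompressed. A real recorder would more likely use a structural JSON diff, but the decision logic stays the same.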

Lines are stored in the dump in the following format, with columns separated by the pipe character (|):

- timestamp

- agent ID

- interface ID

- full/diff flag

- compressed/uncompressed flag

- data, stored as Base64
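Writing and reading back one such line might look like the sketch below. The `DumpLine` class, the one-letter flag values (`F`/`D`, `Z`/`P`) and the epoch-millisecond timestamp are assumptions for illustration; the post only lists the columns. Standard Base64 output never contains `|`, so only the IDs have to avoid that character.

```java
import java.util.Base64;

/** Hypothetical codec for one line: timestamp|agentId|interfaceId|fullFlag|compressedFlag|data */
final class DumpLine {
    final long timestamp;      // assumed: epoch milliseconds
    final String agentId;      // must not contain '|'
    final String interfaceId;  // must not contain '|'
    final boolean full;        // F = full document, D = diff
    final boolean compressed;  // Z = GZIP-compressed, P = plain
    final byte[] data;

    DumpLine(long timestamp, String agentId, String interfaceId,
             boolean full, boolean compressed, byte[] data) {
        this.timestamp = timestamp;
        this.agentId = agentId;
        this.interfaceId = interfaceId;
        this.full = full;
        this.compressed = compressed;
        this.data = data;
    }

    String format() {
        return timestamp + "|" + agentId + "|" + interfaceId + "|"
                + (full ? "F" : "D") + "|" + (compressed ? "Z" : "P") + "|"
                + Base64.getEncoder().encodeToString(data);
    }

    static DumpLine parse(String line) {
        String[] cols = line.split("\\|", -1); // -1 keeps empty trailing columns
        if (cols.length != 6) {
            throw new IllegalArgumentException("Expected 6 columns: " + line);
        }
        return new DumpLine(Long.parseLong(cols[0]), cols[1], cols[2],
                flag(cols[3], "F", "D"), flag(cols[4], "Z", "P"),
                Base64.getDecoder().decode(cols[5]));
    }

    private static boolean flag(String value, String yes, String no) {
        if (value.equals(yes)) return true;
        if (value.equals(no)) return false;
        throw new IllegalArgumentException("Unknown flag: " + value);
    }
}
```

For example, a compressed diff for interface `status` of agent `agent-7` whose payload is the bytes 1, 2, 3 would be written as `1000|agent-7|status|D|Z|AQID`.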

Currently the dump is a plain-text file; a binary format could of course be used for better compression. Using this approach, we are able to record one month of Agent behavior in a 50 MB file!

Initially we planned to use the dump solely for emulation. But later we found many more possible use cases, such as serving as the ultimate data source for regression tests. For that, we decided to feed data from the dump directly into the Server, so the HTTP communication was removed. Then it was possible to test and debug, but debugging a week of data would take a week. Hmmm.
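One possible way to plug the dump into the Server without HTTP is to hide the Agent behind an interface and give the Server a dump-backed implementation in tests. The names `AgentSource` and `DumpAgentSource` are hypothetical, the HTTP-polling production implementation is not shown, and the sketch assumes diffs have already been applied so that every stored entry is a complete document. `TreeMap.floorEntry` implements the rule that the last recorded document stays valid until a newer one appears.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Hypothetical abstraction the Server depends on instead of calling the Agent over HTTP. */
interface AgentSource {
    /** Returns the document the Agent would serve at the given time, or null if none yet. */
    String fetch(String agentId, String interfaceId, long timeMillis);
}

/** Dump-backed implementation for tests and debugging (diffs assumed already applied). */
final class DumpAgentSource implements AgentSource {
    // One timeline per agent/interface pair: timestamp -> complete document.
    private final Map<String, NavigableMap<Long, String>> timelines = new HashMap<>();

    void add(String agentId, String interfaceId, long timestamp, String document) {
        timelines.computeIfAbsent(agentId + "|" + interfaceId, key -> new TreeMap<>())
                .put(timestamp, document);
    }

    @Override
    public String fetch(String agentId, String interfaceId, long timeMillis) {
        NavigableMap<Long, String> timeline = timelines.get(agentId + "|" + interfaceId);
        if (timeline == null) {
            return null;
        }
        // Latest document recorded at or before timeMillis: it stays valid until a newer one.
        Map.Entry<Long, String> entry = timeline.floorEntry(timeMillis);
        return entry == null ? null : entry.getValue();
    }
}
```

Because the Server only sees `AgentSource`, the same processing code runs against live Agents and against recorded dumps.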

Soon the idea of working in fake real time appeared. We changed all code so that instead of Java functions that return the real time (like new Date()), it uses a TimeProvider passed in by dependency injection. Production code gets a TimeProvider that returns the real time; tests and debugging get a TimeProvider that returns the current time in the dump. So an exact test covering a week of Agent behavior runs in 1 minute. Wow.
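The TimeProvider idea might look like the sketch below. Only the name `TimeProvider` comes from the project; the method `currentTimeMillis`, the classes `SystemTimeProvider`, `DumpTimeProvider` and `StaleAgentDetector`, and the `advanceTo` hook called by the replay loop are assumptions.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Source of "now", injected instead of calling new Date() or System.currentTimeMillis(). */
interface TimeProvider {
    long currentTimeMillis();
}

/** Production implementation: real wall-clock time. */
final class SystemTimeProvider implements TimeProvider {
    @Override
    public long currentTimeMillis() {
        return System.currentTimeMillis();
    }
}

/** Replay implementation: "now" is the timestamp of the dump record being processed. */
final class DumpTimeProvider implements TimeProvider {
    // AtomicLong so Server threads can read the time while the replay thread advances it.
    private final AtomicLong now = new AtomicLong();

    /** Called by the (hypothetical) replay loop before each record is handed to the Server. */
    void advanceTo(long dumpTimestamp) {
        now.accumulateAndGet(dumpTimestamp, Math::max); // time never moves backwards
    }

    @Override
    public long currentTimeMillis() {
        return now.get();
    }
}

/** Example consumer (hypothetical): business logic only ever asks the injected provider. */
final class StaleAgentDetector {
    private final TimeProvider time;
    private final long timeoutMillis;

    StaleAgentDetector(TimeProvider time, long timeoutMillis) {
        this.time = time;
        this.timeoutMillis = timeoutMillis;
    }

    boolean isStale(long lastSeenMillis) {
        return time.currentTimeMillis() - lastSeenMillis > timeoutMillis;
    }
}
```

Since the replay loop advances the clock as fast as it can read records, timeouts and schedules fire in dump time rather than wall-clock time. The JDK's `java.time.Clock` abstract class fills the same role and can be injected the same way; a custom interface just keeps the replay hook explicit.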

And a bonus use: as I mentioned earlier, we lacked a specification of Agent behavior. But now we have dumps for many Agents in various environments, spanning many weeks. We are able to analyze this data and present it to developers in a clean and comprehensive form: graphs, tables, statistics.
